
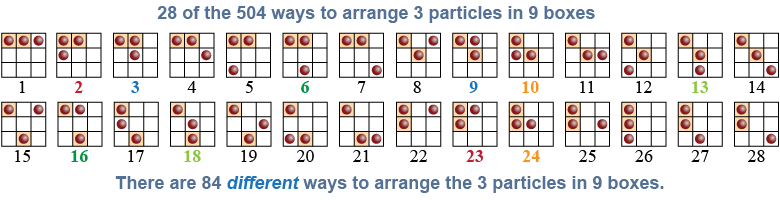

Entropy describes the disorder in a system: the number of different ways the system can be arranged internally while still having the same macroscopic properties, such as temperature and density. To illustrate the idea, consider a system of nine boxes containing three particles. This system has an average “density” of 0.33 particles per box. There are 504 ways to place those three particles in the nine boxes one at a time, but many of those arrangements repeat (such as #6 and #16 in the figure) because the three particles are identical. After removing duplicates, there are 84 unique ways to arrange the three particles in the nine boxes. Each of the 84 arrangements has the same “density”; therefore, the “entropy” is 84.


Why are there 504 ways to arrange those three particles? Consider the first particle: it can be placed in any one of the nine boxes. After you place it, there are eight possible boxes into which the second particle can be placed. After those two are placed, there are only seven remaining boxes into which the third particle can be placed. The total number of permutations is therefore 9 × 8 × 7 = 504. In the mathematical terminology of permutations, with n = 9 boxes, the total is n × (n − 1) × (n − 2). If we were arranging nine particles in the nine boxes, the total number of permutations would be n!, or n factorial, which is equal to n × (n − 1) × (n − 2) × ··· × 2 × 1.

But many of these permutations repeat, because the particles are identical. Since there are three identical particles, each arrangement is counted once for every order in which the three could have been placed, so we divide by 3!, or 3 × 2 × 1 = 6. The total number of unique arrangements is therefore 504/6 = 84.
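The counting argument above can be checked by brute force. The following is a minimal sketch in Python, assuming the boxes are simply numbered 0 through 8; the variable names are illustrative and not part of the original explanation.

```python
import math
from itertools import permutations

BOXES = 9
PARTICLES = 3

# Every ordered way to place three labeled particles in nine boxes.
ordered = list(permutations(range(BOXES), PARTICLES))

# Identical particles: an arrangement is just the set of occupied boxes,
# so orderings that fill the same boxes collapse into one entry.
unique = {frozenset(placement) for placement in ordered}

print(len(ordered))                      # ordered placements: 9 * 8 * 7
print(len(unique))                       # distinct arrangements
print(len(ordered) // math.factorial(PARTICLES))
```

The brute-force counts agree with the closed forms `math.perm(9, 3)` and `math.comb(9, 3)` from the Python standard library (version 3.8 or later).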


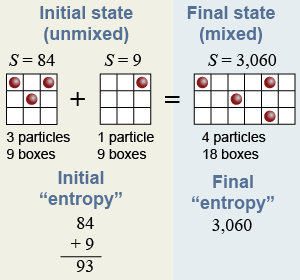

This system exchanges “density” by mixing with another system that has one particle in nine boxes. The other system has an “entropy” of nine because there are nine ways to put one particle in nine boxes. Before mixing, the two systems together have 84 × 9 = 756 possible arrangements, because each arrangement of the first can be paired with any arrangement of the second. The entropy of the new, 18-box system is 3,060 because there are 3,060 different ways to organize four particles in 18 boxes, and all have the same “density” of 4/18. The total entropy, or disorder, has increased from 756 in the original state of the system to 3,060 in the new state.
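These counts can be reproduced with the standard binomial coefficient. This is a small sketch, assuming that the two separate systems are independent, so that their joint arrangements are counted as a product; the helper name `arrangements` is hypothetical.

```python
import math

def arrangements(boxes, particles):
    """Number of ways to place identical particles in distinct boxes,
    at most one particle per box."""
    return math.comb(boxes, particles)

first = arrangements(9, 3)       # three particles in nine boxes
second = arrangements(9, 1)      # one particle in nine boxes
separate = first * second        # joint arrangements before mixing (assumed independent)
mixed = arrangements(18, 4)      # four particles in the combined 18 boxes

print(first, second, separate, mixed)
```

Under that assumption, the unmixed state corresponds to 756 of the 3,060 arrangements available to the mixed system.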



To explain irreversibility, let the particles constantly collide and redistribute themselves randomly among the 3,060 different arrangements of the 18-box system. What is the chance of going back to the original state of three particles in the first nine boxes and one particle in the other nine boxes? The answer is that there are 84 × 9 = 756 ways for this to happen out of the 3,060 different possibilities, so the chance is 756/3,060, or about 25% that the mixing will spontaneously reverse.
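The 25% figure can be confirmed by listing every arrangement of the 18-box system and counting those that match the original state. The sketch below assumes boxes 0 through 8 belong to the first system and boxes 9 through 17 to the second; the function name `reversal_odds` is illustrative only.

```python
from fractions import Fraction
from itertools import combinations

def reversal_odds(boxes_per_side=9, left=3, right=1):
    """Enumerate all arrangements and count those with exactly `left`
    particles in the first half and `right` in the second half."""
    favorable = 0
    total = 0
    for occupied in combinations(range(2 * boxes_per_side), left + right):
        total += 1
        in_first_half = sum(1 for box in occupied if box < boxes_per_side)
        if in_first_half == left:
            favorable += 1
    return favorable, total

favorable, total = reversal_odds()
chance = Fraction(favorable, total)
print(favorable, total, float(chance))
```

Enumeration is only practical for tiny systems like this one, which is part of the point: the number of arrangements grows very quickly as boxes and particles are added.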


One gram of air in an “empty” 1 L soda bottle contains 2 × 10²² molecules. Entropy measures the number of different ways, or degrees of freedom, in which the individual positions and velocities of each molecule can be switched around while keeping the same macroscopic properties (such as temperature) for the whole collection. Similar to mixing density in the particle/box example, heat moving from hot to cold always increases the number of degrees of freedom in a system. With 4 particles in 18 boxes there was a 25% chance that a mix would randomly reverse. With, say, 10²³ particles in 10²⁹ boxes, the chance of cold and hot randomly “unmixing” is so vanishingly small it will never occur in a trillion times the age of the universe. That is why increasing entropy makes a process irreversible.
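To get a feel for how quickly the odds collapse, the same ratio of arrangements can be evaluated with logarithms, since the raw counts are far too large to write out. This is a rough sketch, not a physical model: it assumes the large system starts with the same 3-to-1 split between two equal halves as the small example, which the text does not specify, and it uses the log-gamma function to approximate the binomial coefficients. The function names are hypothetical.

```python
import math

def log_comb(n, k):
    """Natural log of the binomial coefficient C(n, k), via log-gamma
    so that very large (floating-point) arguments can be handled."""
    return math.lgamma(n + 1) - math.lgamma(k + 1) - math.lgamma(n - k + 1)

def log10_unmix_probability(left, right, boxes_per_side):
    """log10 of the chance that a random arrangement has exactly `left`
    particles in the first half and `right` in the second half."""
    favorable = log_comb(boxes_per_side, left) + log_comb(boxes_per_side, right)
    total = log_comb(2 * boxes_per_side, left + right)
    return (favorable - total) / math.log(10)

# Small example from the text: 3 + 1 particles, 9 boxes per side.
small = log10_unmix_probability(3, 1, 9)
print(10 ** small)

# Assumed large example: 10^23 particles split 3-to-1, 10^29 boxes in total.
large = log10_unmix_probability(7.5e22, 2.5e22, 5e28)
print(large)  # base-10 exponent of the probability
```

For the small system the function recovers the 756/3,060 ratio. For the large one it returns only the exponent of the probability, an enormous negative number, because the probability itself is too small to represent as an ordinary floating-point value.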
